What happens when a well-known crypto figure experiments with memecoins? The result is a shocking look at Web3's consent issues, revealing flaws that go beyond cryptocurrency and touch the core of the digital world's trust problems.

The Experiment: A Test of Web3’s Trust and Consent Issues

Dan Finlay, the co-founder of MetaMask, didn't just watch the memecoin trend from the sidelines. He ran his own experiment to better understand how Web3 handles the crucial issues of consent and trust. Finlay minted two memecoins: Consent on Ethereum and I Don't Consent on Solana. The idea was simple: he wanted to see how people would interact with these tokens and how they would respond to the hype surrounding them.

However, the experiment quickly turned into a disaster that exposed major flaws in Web3. What he uncovered wasn't just about cryptocurrency. It was about the broader problem of user expectations on any digital platform, including those built around artificial intelligence (AI), and about how dangerously blurry the line between public visibility and user consent has become.

The Dark Side of Memecoins

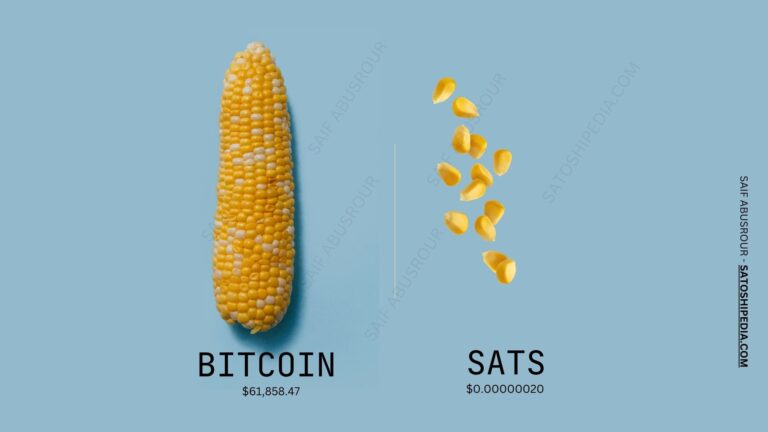

Memecoins are speculative tokens, usually driven by hype rather than underlying value, and Finlay's experiment showed just how risky they are. After he launched the two memecoins, their value shot up quickly, fueled by a frenzy of rapid trading. At one point, Finlay's holdings were worth more than $100,000. But because the coins had no clear purpose or structure, people began to lose money. Investors started asking for long-term plans for tokens that had no real utility, which led to confusion and frustration.

This chaos highlighted one major issue: people may consent to put their money into memecoins, but it is unclear what they are actually agreeing to. The tokens themselves were undefined, so the trust between investors and creators quickly broke down. Finlay's experiment showed that without clear structure, consent becomes meaningless.

Memecoins and Consent: A Bigger Problem for Web3 and AI

Finlay's experiment didn't stay within the memecoin space. He drew parallels to problems on other platforms, such as Bluesky, where user posts were collected to train AI models without users' explicit consent. Both Web3 and AI face the same underlying problem: a disconnect between consent as defined at the technical, protocol level and the social expectations users actually have.

In both cases, consent is poorly defined and not transparently communicated, so users end up feeling misled or exploited. Finlay's point is clear: when consent is vague on a digital platform, whether in memecoins, AI, or other Web3 systems, the result is confusion that can lead to serious financial and ethical problems.

What Needs to Change in Web3 and AI

So, what does Finlay suggest for the future? He argues that Web3 needs better tools and clearer incentives. Developers should have more control over their tokens, allowing them to restrict markets or provide structured sale methods. This would help create a more responsible and fun environment where investors aren’t left in the dark.

But it's not just about tokens. It's about building trust in any digital platform. Whether in AI or Web3, systems need to be clear and transparent, and they need to respect users' expectations. Without those qualities, the trust people place in digital products will keep being undermined.

Why This Is Important for You

This experiment is more than just a tale of memecoins. It’s a warning for anyone involved in Web3, AI, or cryptocurrency. As you dive deeper into this world, understanding the issues of consent, trust, and transparency will help you make smarter, more informed decisions. Whether you’re an investor, developer, or just a curious observer, the lessons from this experiment are critical for navigating the future of digital technology.

Key Takeaways to Remember:

- Memecoins can be highly speculative and risky, with little real value.

- Consent in Web3 and AI is often poorly defined, leading to confusion and exploitation.

- Developers need to create clearer systems that respect user expectations and build trust.

- The future of Web3 depends on making platforms more transparent and responsible.

By understanding these flaws and advocating for better systems, you can not only protect yourself but also contribute to shaping a more ethical and sustainable digital future.