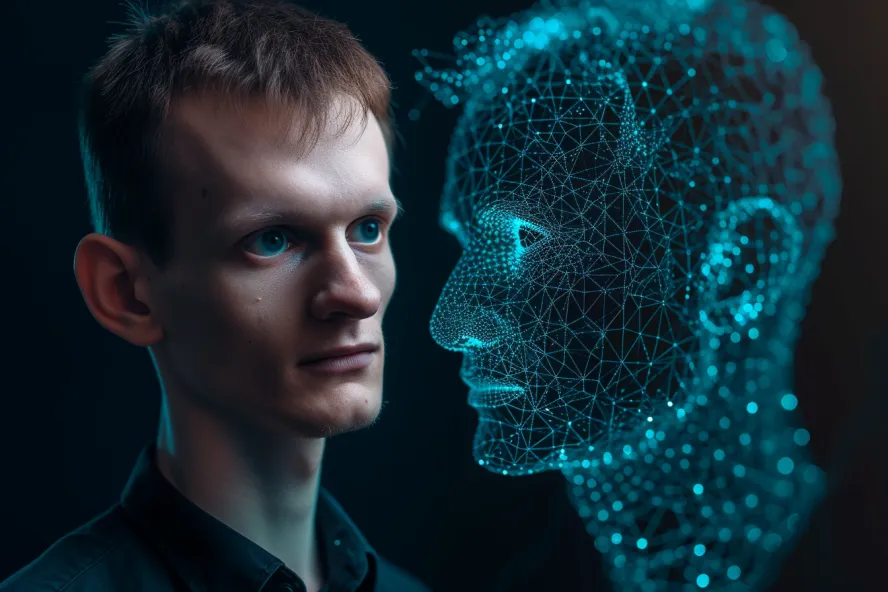

In a thought-provoking blog post, Vitalik Buterin, the co-founder of Ethereum, raised an urgent question: What if the technology we’re creating, specifically superintelligent AI, becomes so advanced that it poses a threat to humanity? Buterin suggests a radical way to slow down AI development and buy us more time to prepare for what’s ahead: a global pause on AI hardware.

Here’s the catch: Buterin argues that superintelligent AI might be as little as five years away, and its consequences are unknown. To prevent potentially dangerous outcomes, he proposes a drastic measure: a “soft pause” on the global computing power available for AI development, lasting one to two years. This would sharply reduce the computing resources available and slow AI’s progress, giving us more time to figure out how to control it.

But why would this work? The reasoning is simple: the most capable AI systems depend on access to massive computational resources, such as data centers and supercomputers. By restricting these resources, Buterin believes we can keep AI from reaching dangerous levels too quickly.

But there’s more to the idea than just slowing down the clock. Buterin outlines how such a pause could be enforced, including tracking and registering AI hardware and requiring approval from international bodies to keep it running. One version would require every AI chip to receive signatures from major global organizations once a week before it is allowed to operate. The process could even be secured through blockchain technology, making it much harder for anyone to bypass the system.
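To make the weekly-signature idea more concrete, here is a minimal Python sketch of the check a chip might run before operating. Everything in it is an assumption for illustration: the three signing bodies (`body_a`, `body_b`, `body_c`), the message format, and the function names are hypothetical, and the sketch assumes one shared weekly message covers all chips. It also swaps in HMAC-SHA256 from Python’s standard library as a stand-in for real signatures; an actual design would use public-key signatures so chips hold only verification keys, not secrets. The blockchain component is not modeled.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical signing bodies; the proposal as described here does not name them.
SIGNING_BODIES = ("body_a", "body_b", "body_c")


@dataclass(frozen=True)
class WeeklyAuthorization:
    week: int                     # hypothetical week counter (e.g. weeks since some epoch)
    signatures: dict[str, bytes]  # signing body name -> signature over that week's message


def message_for_week(week: int) -> bytes:
    # Assumption: every chip checks the same message, so one published set of
    # signatures authorizes all chips for that week (or none of them).
    return f"ai-compute-authorized-week-{week}".encode()


def sign(key: bytes, week: int) -> bytes:
    # HMAC-SHA256 stands in for a real digital signature scheme.
    return hmac.new(key, message_for_week(week), hashlib.sha256).digest()


def chip_may_run(auth: WeeklyAuthorization, current_week: int,
                 keys: dict[str, bytes]) -> bool:
    """Return True only if every body signed the current week's message."""
    if auth.week != current_week:
        return False  # stale or future authorization: the chip stays off
    for body in SIGNING_BODIES:
        sig = auth.signatures.get(body)
        if sig is None:
            return False  # a single missing signature blocks operation
        if not hmac.compare_digest(sig, sign(keys[body], current_week)):
            return False  # forged or mismatched signature
    return True
```

In this sketch, withholding a single signature in a given week is enough to keep every chip switched off, which is the mechanism that would give the pause its teeth. Requiring a fresh signature each week also means a chip cannot simply reuse an old approval.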

Why does this matter?

- AI Superintelligence: Superintelligent AI is AI that is smarter than the most brilliant humans in every field. If we aren’t careful, it could become too powerful too fast for us to control. Buterin’s proposal aims to prevent that.

- Dangers of Uncontrolled AI: Buterin isn’t the only one worried about AI’s future. Over 2,600 tech experts have signed an open letter urging a pause on advanced AI development because of the risks such systems pose to society. This is no longer just a theoretical issue; it is becoming a real concern.

- Buying Time for Humanity: Buterin’s goal isn’t to stop AI forever. It’s to slow things down so we can prepare for the risks that come with superintelligent AI. The hope is that, with the right precautions in place, AI can be developed more safely.

Key Takeaways You Should Remember:

- Superintelligent AI: An AI that surpasses human intelligence.

- Soft Pause: A temporary, global reduction in the computing power available for AI, intended to slow down progress.

- d/acc (Defensive Accelerationism): Buterin’s idea of prioritizing technologies that strengthen defense and safety, in contrast to e/acc (effective accelerationism), which pushes for rapid, largely unchecked advancement.

- Blockchain in AI: The potential use of blockchain to ensure accountability in AI development.

Why should you care about this? Well, if you’re interested in technology, particularly in AI and cryptocurrency, this concept connects directly to how we might use advanced tech responsibly. If AI becomes a powerful force, it could change everything—from job markets to the very fabric of society. Understanding these discussions and ideas will help you stay ahead of the curve and be part of the conversation as technology moves forward.

In short, Vitalik Buterin’s warning and suggestion could shape the future of AI and, ultimately, the future of our world. By getting informed now, you’re not just keeping up with tech trends—you’re preparing for the potential future we all have to navigate together.